Everything You Know About AI Is Wrong… and Right!

Gabriel Sanchez

End Point Strategies

@gabrielsanchez

The most interesting thing about AI is that we’ve been using it for years without realizing it.

We asked Waze to guide us through traffic long before we knew the phrase “machine learning.” We used Zillow to find homes that matched our budgets, commutes, and desired level of walkability. We let algorithms curate our playlists, recommend restaurants, and filter spam. We’ve trusted AI (technically, Artificial Narrow Intelligence) all along.

We just didn’t call it that.

In the words of Dana Carvey’s Grumpy Old Man, “That’s the way it was… and we liked it!” As a social behavior change communicator, I can tell you: no one embraces change – at first.

Then came generative AI. Tools like ChatGPT, Perplexity, and Gemini don’t just assist; they create. And this shift is forcing us to confront the untapped power and unknown future of these tools: what they are, what they mean, and whether we’re using them… or being used by them.

"In my day, we didn’t have these fancy bespoke generative AI platforms. We had Billy down the block. Billy knew everything... and if he didn’t... he made it up. And we believed him! That's the way it was, and we liked it!"

Now “AI slop” is in the lexicon. And everywhere you look, someone’s offering an “expert” opinion on a technology we’re all still figuring out. But the learning curve isn’t as steep as it feels. We already have instincts for this. We know how to ask good questions. We know how to refine a search. We know how to spot a useful result. And more of us are learning how to apply those instincts to generative tools.

So, what are we getting right? And what are we getting wrong?

What We Get Wrong About AI

That it’s new. It’s not. We’ve been using AI-powered tools for over a decade; they just weren’t branded that way.

That it’s either magic or menace. It’s neither. It’s a tool, not a god. And like any tool, it reflects the hand that wields it.

That it replaces creativity. It doesn’t. Originality isn’t about who typed the first draft. It’s about voice, perspective, framing.

That you need to be an expert to use it. You don’t. If you can Google, you can prompt.

That using AI is cheating. It’s not. It’s like using a calculator for math or spellcheck for writing. You still have to know what you’re doing. And if you don’t, it shows.

What We Get Right About AI

It’s a big deal. Generative AI is a foundational technology, a big step toward Artificial General Intelligence (think C-3PO, not Skynet). Your instincts are right: this changes things.

It’s moving fast. New tools, updates, and capabilities are emerging constantly. The pace is real. Paging Dr. Ian Malcolm: "[AI developers are] so preoccupied with whether or not they could, they [aren't stopping] to think if they should." (Okay, now you can think Artificial Superintelligence, like Skynet and The Matrix.)

It raises real questions about ethics, accuracy, bias, privacy, labor, and resource consumption. We’re right to pay attention, and to call it out.

It can be helpful. Whether summarizing notes or sparking ideas, it really can make us more efficient.

It still needs us. AI is only as good as the context, prompts, and judgment behind it. Human thinking still leads. Will the "snake of AI slop" eat itself?

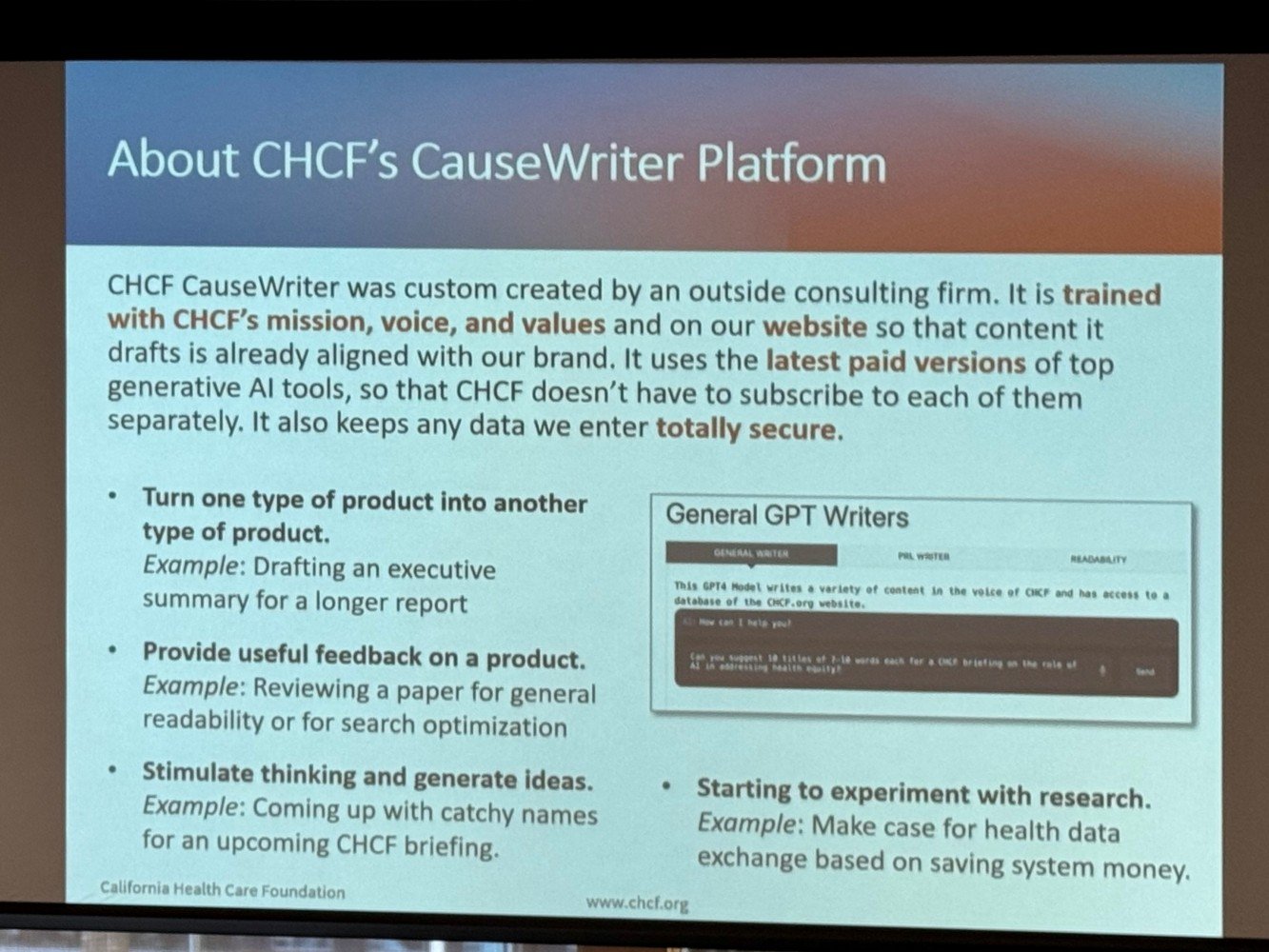

At a recent AI Summit hosted by The Communications Network, what struck me most wasn’t the demos; it was the intentionality. The California Health Care Foundation (CHCF) developed a custom AI tool trained on its own internal documents – blogs, summaries, strategic materials. It doesn’t replace the foundation’s voice – it extends it.

CHCF’s early-stage adoption is grounded in practical priorities: use AI to improve impact, manage risk, and learn where it realistically adds value. They’re approaching it in a way that is focused, cost-effective, and rooted in mission over hype.

That’s where I think this is headed: AI as familiar and mundane as email or the web. Not magic. Not menace. Just… part of the workflow.

This bespoke platform, which pulls from a variety of AI tools to create its outputs, is amazing.

I’ve heard a few metaphors for how to think about this shift. My favorite is the escalator: you can stand still and let it carry you, or you can add your effort and walk, getting to where you want to go faster.

I’ve also seen a couple of working models for "walking with AI," ways to integrate these tools without losing your voice:

The 30/70 model: Let AI draft the first 30%, then refine it with your perspective, judgment, and tone (the remaining 70%).

The 10/80/10 model: Spend 10% of your effort crafting a strong prompt, let AI handle 80% of the execution, and use the final 10% to edit and shape the results.

Either way, your value doesn’t come from skipping steps. It comes from knowing which steps to take, and how to take them well.

I understand the fears. I’ve felt them too. Watching AI produce something that looks like your work in seconds can be jarring. Seeing it rely on sources that are inaccurate, or worse, downright racist, is alarming. But once I understood these tools’ limits, once I took their measure, I realized they weren’t a threat to what I do. They were a way to do it better.

We’ve made these transitions before. From Thomas Guides to Google Maps. From dial-up to mobile. Every shift came with disruption. This one will too. (Think Diffusion of Innovations.)

And there are legitimate concerns about resource consumption, ethics, and inherent and systemic bias. But I’m no longer afraid. I’m paying attention. I’m learning. And I’m walking, not running, up the escalator.